Portfolio…

(TierOne program elements. From upper-left, counter-clockwise: mobile nitrous oxide delivery system (MONODS), rocket motor test stand trailer (TST), SpaceShipOne, the SCUM (Scaled Composites unit mobile), and WhiteKnightOne.)

Please see my resume here.

VirtuePlay (1999-2001, and 2006-2009)

Before founding VirtuePlay, my future co-founders and I built several prototype game engines and tools. We shared a frustration with the limitations of existing game development tools and with the poor working conditions common in the game industry at the time. Believing that concepts like WYSIWYG (What You See Is What You Get) and rapid application development could revolutionize game development, we created a novel, full-featured game development platform.

In early 2000, we made the pivotal decision to transform our side projects and ideas into a full-fledged company. With the support of investors, we dedicated the initial years to refining our core technology and crafting compelling demos. Through our investors’ connections, we gained privileged access to cutting-edge hardware and unique datasets.

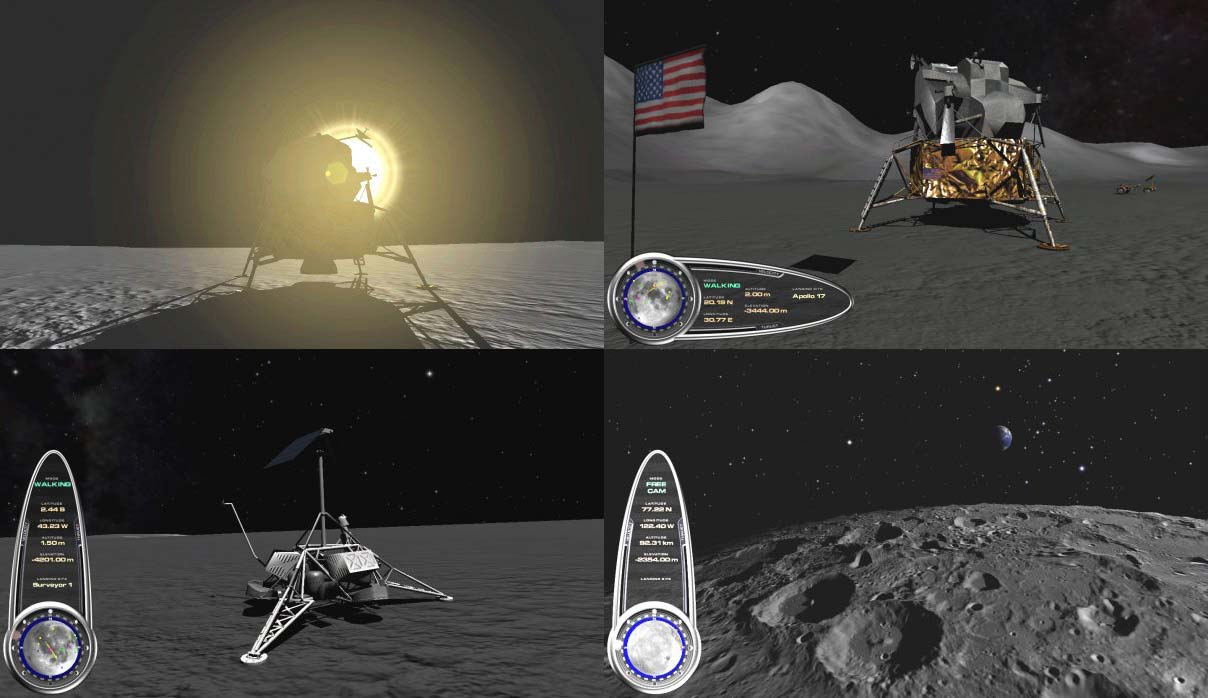

Lunar Explorer, circa 2002. One of my primary contributions to Lunar Explorer was the real-time, planetary-scale terrain rendering system based on ROAM (real-time optimally adapting mesh).

Eager to showcase our technology, we embarked on an ambitious project: a fully immersive, interactive virtual reality simulation of the Moon. Users could explore and navigate the lunar surface and interact with objects of interest in orbit. Using accurate terrain and imagery from the Clementine and Lunar Prospector missions, we meticulously recreated the US and Soviet landing sites, complete with detailed artifacts. To deepen the immersion, we incorporated a military-grade stereoscopic head-mounted display (HMD) developed by Rockwell, along with 9-DOF sensors for tracking head and body movement. It was a remarkable achievement, particularly since it came roughly a decade before Oculus.

Our virtual reality Moon simulation garnered significant attention and was showcased at various events and trade shows, including those sponsored by NASA. Even the renowned astronaut “Buzz” Aldrin paid us a visit to personally experience our creation.

Click here to watch the Lunar Racing Championship (LRC) trailer video.

While we licensed our technology to several game companies and developed numerous impressive demos over the years, we had yet to release a full-scale game of our own. To change that, we began intensive development of our flagship title, Lunar Racing Championship.

SpaceShipOne (2001-2005)

SpaceShipOne hanging next to the Spirit of St. Louis and the Bell X-1 at the Smithsonian National Air and Space Museum in Washington, D.C.

SpaceShipOne was a pioneering spacecraft designed by Scaled Composites, Burt Rutan’s company. It gained wide recognition as the first privately built manned spacecraft to reach space. In 2004, SpaceShipOne completed two suborbital spaceflights within two weeks, winning the $10 million Ansari X Prize.

The spacecraft used a hybrid rocket motor and a novel “feathering” mechanism that allowed it to re-enter the Earth’s atmosphere safely. It was carried aloft by a purpose-built carrier aircraft, WhiteKnightOne, which released SpaceShipOne at high altitude. After separation, the spacecraft ignited its rocket motor and climbed to suborbital space, reaching altitudes above 100 kilometers (62 miles).

SpaceShipOne’s accomplishments marked a significant milestone in the development of commercial space travel. It demonstrated the viability of privately funded space ventures and paved the way for subsequent projects such as Virgin Galactic’s SpaceShipTwo. The success of SpaceShipOne also highlighted the ingenuity and entrepreneurial spirit within the aerospace industry, inspiring a new wave of interest and investment in commercial spaceflight.

Click to watch a brief (7 min.) documentary about SpaceShipOne.

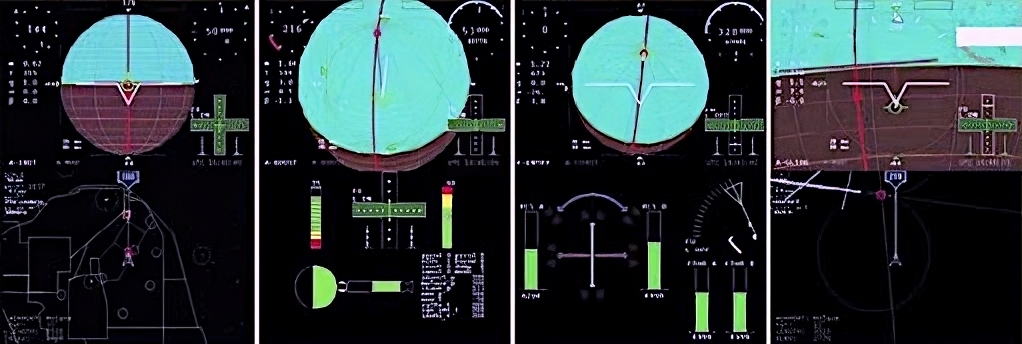

I was responsible for designing and developing spacecraft avionics, specifically the Flight Director Display (FDD). My primary focus was working closely with test pilots and aerospace engineers to design a user experience and interfaces that covered every flight mode, drawing on my background as a pilot and my prior exposure to aerospace engineering. We went through numerous iterations, testing the system both in simulation and in flight. The avionics were implemented in C/C++ and assembly, with extensive use of SIMD code to optimize performance for both the display graphics and the flight director logic. I also contributed to several other systems, including the SpaceShipOne flight simulator, the Signals and Navigation Unit (SNU), and the data acquisition and ground telemetry systems.

SpaceShipOne and WhiteKnightOne captive carry flight.

SpaceShipOne cockpit with the Flight Director Display (FDD) at center.

SpaceShipOne FDD graphics. From left to right, modes of flight: drop, boost, coast/reentry and glide.

After winning the Ansari X Prize, our focus immediately shifted to SpaceShipTwo and WhiteKnightTwo development for the Virgin Galactic space flight company.

PRIME (2009)

Click to watch the PRIME product review video.

The Predator/Reaper Integrated Mission Environment, known as “PRIME,” originated as a program sponsored by the Air Education and Training Command (AETC), headquartered at Randolph Air Force Base, Joint Base San Antonio, Texas. Its primary objective was to address limited access to comprehensive Reaper and Predator simulators, particularly for fundamental Remotely Piloted Aircraft (RPA) training. The goal of PRIME was to create a cost-effective, networked PC hardware configuration that emulates the functions of an MQ-9 Reaper Ground Control Station (GCS) for both pilots and sensor operators (SOs), suitable for classroom or laboratory settings.

PRIME used a scalable software architecture that supported deployment scenarios ranging from solo, offline task training on a single laptop to a full multi-computer simulation environment for combined pilot, SO, and instructor training. The software replicated all displays, menus, and functions of the MQ-9 Reaper systems, including weapons, EO/IR sensor operations, satellite delay, and more, for an authentic training experience.

To handle the complexity of many interacting systems within a manageable development timeframe, I designed a custom architecture. It combined a visual programming environment with a flow-parallel runtime, an entity-component-system (ECS) compositional architecture, and Harel statecharts for conditional logic. The runtime core used series-parallel partial ordering to merge the discrete system data flow graphs, created by either programmers or designers, into a single directed acyclic graph (DAG) definition, maximizing data parallelism during real-time simulation. The entire MQ-9 system, including the ground control station (GCS), flight model, physics engine, and graphical user interface (GUI), was implemented within this architecture.
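PRIME’s actual runtime is not public, so the following is only a rough illustration of the idea of merging separately authored data flow graphs into one DAG whose partial order exposes parallelism. It assumes each graph is a mapping from a node to the nodes it reads from, and it uses a level-by-level topological sort (Kahn’s algorithm) as a simpler stand-in for full series-parallel decomposition: stages run in series, and nodes within a stage can run in parallel. The function names `merge_graphs` and `parallel_stages` and all node names are hypothetical.

```python
"""Sketch: merge data flow graphs into one DAG and group it into parallel stages.

Hypothetical illustration only; not PRIME's actual runtime.
"""
from collections import defaultdict


def merge_graphs(graphs):
    """Union several data flow graphs into one dependency map.

    Each graph maps a node name to the names of the nodes it reads from.
    Nodes that share a name across graphs are treated as the same node.
    """
    merged = {}
    for graph in graphs:
        for node, deps in graph.items():
            merged.setdefault(node, set()).update(deps)
            for dep in deps:
                # Make sure pure sources also appear as keys.
                merged.setdefault(dep, set())
    return merged


def parallel_stages(dag):
    """Group DAG nodes into stages using Kahn's algorithm, one level at a time.

    Every node in a stage depends only on nodes in earlier stages, so the
    nodes within a stage can run in parallel while stages run in series.
    Raises ValueError if the merged graphs contain a cycle.
    """
    indegree = {node: len(deps) for node, deps in dag.items()}
    dependents = defaultdict(list)
    for node, deps in dag.items():
        for dep in deps:
            dependents[dep].append(node)

    stages = []
    ready = sorted(node for node, count in indegree.items() if count == 0)
    scheduled = 0
    while ready:
        stages.append(ready)
        scheduled += len(ready)
        next_ready = []
        for node in ready:
            for child in dependents[node]:
                indegree[child] -= 1
                if indegree[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)

    if scheduled != len(dag):
        raise ValueError("data flow graphs contain a cycle")
    return stages


if __name__ == "__main__":
    # Hypothetical subsystem graphs, authored separately.
    pilot = {"stick": set(), "autopilot": {"stick"}, "flight_model": {"autopilot"}}
    sensor = {"gimbal_input": set(), "eo_ir": {"gimbal_input", "flight_model"}}
    displays = {"hud": {"flight_model"}, "sensor_display": {"eo_ir"}}

    for i, stage in enumerate(parallel_stages(merge_graphs([pilot, sensor, displays]))):
        print(i, stage)
```

Run as a script, the example prints five stages: `['gimbal_input', 'stick']`, `['autopilot']`, `['flight_model']`, `['eo_ir', 'hud']`, and `['sensor_display']`. The two graph sources start together, and `eo_ir` and `hud` share a stage because neither reads from the other. Sorting each stage only makes the output deterministic; a real scheduler would dispatch each stage’s nodes to worker threads.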

The PRIME simulator has been instrumental in training numerous pilots and sensor operators, and continues to be operational to this day. It has proven to be a valuable tool in enhancing the training experience and preparing personnel for their roles in operating and controlling Predator and Reaper drones.

DARPA PCAS (2011)

The Persistent Close Air Support (PCAS) program, initiated by the Defense Advanced Research Projects Agency (DARPA), aimed to improve the effectiveness and efficiency of close air support missions. Close air support plays a critical role in providing air power support to ground troops engaged in combat. The PCAS program sought to develop advanced technologies and capabilities to enhance the coordination and communication between ground forces and aircraft, enabling more accurate and timely delivery of air support.

The PCAS program focused on three key objectives: improving situational awareness, reducing the time required to deliver air support, and minimizing the risk of friendly fire incidents. To achieve these goals, DARPA explored innovative approaches such as leveraging networked communications, real-time sensor data fusion, advanced targeting systems, and autonomous systems.

The program involved the development of advanced software and hardware components that could be integrated into existing aircraft and ground systems. These components aimed to provide ground forces with enhanced battlefield awareness, including the ability to quickly and accurately identify friendly forces, enemy targets, and potential collateral damage risks. Moreover, PCAS aimed to streamline the process of requesting and coordinating air support, reducing the time required for aircraft to respond to requests and engage targets.

By leveraging emerging technologies and advanced capabilities, the PCAS program sought to revolutionize the close air support paradigm, making it more effective, efficient, and safe. The research and development efforts under PCAS aimed to provide warfighters with the tools and systems necessary to better coordinate and execute close air support missions, ultimately enhancing the effectiveness of military operations on the ground.

In response to the challenges and opportunities of the DARPA PCAS program, our team proposed an innovative, adaptive simulation environment built on PRIME. Our approach used an agile methodology, enabling iterative design and development of both software and hardware components. Crucially, we sought direct feedback from Joint Terminal Attack Controllers (JTACs) using our system, so we could refine our solution against real-world operational requirements.

Our proposal may have seemed ambitious, especially given that we had no previous wins in DARPA Tactical Technology Office (TTO) programs and faced larger competitors, but it succeeded. The DARPA Program Manager shared our belief that an initial sprint using software simulation, combined with hardware-in-the-loop testing, was the most effective way to develop a Minimum Viable Product (MVP) before committing significant resources to physical implementation.

As a result, our team was selected as a Task “B” performer, providing optional support to the two competing Task “A” performers. Throughout our period of performance, we worked closely with these established industry leaders, leveraging their expertise, and we continued contributing to later program phases after the Task “A” field was down-selected to a single performer.

This collaborative approach, guided by an agile mindset and driven by the valuable input from JTACs, allowed us to navigate the competitive landscape successfully. By embracing software simulation and hardware integration in an iterative manner, we were able to develop a robust and adaptable solution while maximizing efficiency and cost-effectiveness. This experience served as a testament to the power of innovation and collaboration in tackling complex challenges within the defense industry.

Me and a soon-to-be autonomous A-10C.

AFRL ATAK for PCAS

Prototype PCAS-specific additions to ATAK

AFRL ATAK (Android Tactical Assault Kit, also known as Android Team Awareness Kit) is a mobile software application developed by the U.S. Air Force Research Laboratory (AFRL). It provides advanced situational awareness capabilities for military personnel and first responders operating in challenging environments.

ATAK integrates various data sources, including mapping, GPS, video feeds, and sensor inputs, into a single user-friendly interface. This enables real-time tracking of friendly forces, sharing of tactical information, and coordination of operations. Users can view maps, overlay custom layers, communicate via secure messaging, and access critical mission data on their mobile devices.

The application supports interoperability with other military systems, allowing seamless integration with existing command and control infrastructure. ATAK also features advanced collaboration tools, enabling users to establish ad hoc networks and share information with teammates in real time, enhancing operational coordination and decision-making.

AFRL ATAK has been widely adopted by military personnel, law enforcement agencies, and emergency responders due to its versatility, ease of use, and powerful situational awareness capabilities. It has proven valuable in a wide range of operational scenarios, including reconnaissance missions, disaster response, and security operations.

In my role, I utilized the AFRL ATAK framework as the foundation for our tablet user interface (UI) development in the PCAS program. As the primary researcher, designer, and developer, I leveraged ATAK to create prototypes that showcased specific workflows and user interface features tailored to PCAS requirements.

By harnessing the capabilities of ATAK, I designed intuitive and user-friendly interfaces for the tablet devices used in the PCAS program. These interfaces facilitated seamless interaction with the system and enabled the execution of key functionalities. Through iterative design and development cycles, I refined the tablet UI to align with the unique needs and operational context of PCAS.

By integrating PCAS-specific workflows and UI elements into the ATAK framework, we were able to demonstrate the effectiveness and potential of the PCAS system. This approach allowed us to gather valuable user feedback and refine the UI design to enhance usability and optimize user experience.

My work with the ATAK framework and tablet UI development played a crucial role in showcasing the capabilities of the PCAS program and its potential impact on mission effectiveness and operational efficiency.

DARPA Plan X (2012)

Plan X functional prototype featured at DARPA Demo Day 2016

DARPA’s Plan X program was initiated with the goal of advancing the field of cyber warfare and addressing the complexities of modern networked environments. The program aimed to develop innovative technologies and tools that would provide military operators with improved situational awareness, mission planning, and execution capabilities in the cyber domain.

Plan X focused on developing a user-friendly, visual interface that would enable operators to understand and control the cyber battlespace effectively. The program aimed to create a platform that would integrate various cyber tools, data sources, and visualization techniques into a cohesive and comprehensive system. This system would facilitate the identification of potential cyber threats, the visualization of cyber assets and their relationships, and the planning and execution of offensive and defensive cyber operations.

Through the Plan X program, DARPA sought to enhance the capabilities of military personnel in cyberspace and improve their ability to effectively defend against cyber attacks and conduct cyber operations. The program encouraged research and development efforts in areas such as cyber situational awareness, visualization, secure collaboration, and robust cyber toolsets.

Plan X represented a significant step forward in the military’s approach to cyber warfare, emphasizing the importance of understanding and controlling the cyber battlespace. The program aimed to provide operators with the necessary tools and technologies to navigate complex cyber environments, identify potential threats, and execute cyber operations with precision and effectiveness.

In late 2011, we began developing the Plan X proposal with a specific focus on securing Task Area (TA) 5, “Intuitive Interfaces,” while also offering support for TA1, “Infrastructure.” Our proposal was successful, and we were awarded both task areas. I was honored to be selected as the Principal Investigator (PI) for the Intific effort, responsible for meeting the DARPA Program Manager’s (PM’s) objectives, collaborating closely with the SETAs (Scientific, Engineering, and Technical Assistance contractors), gathering requirements, and guiding the design and development of critical program features.

Plan X was a highly intricate program with numerous task areas and performers, and its complexity and the diverse expertise it required made it a significant challenge. As the PI, I played a central role in ensuring that our team met the demands of the DARPA PM, collaborated effectively with the SETAs, and delivered on the program’s goals. Navigating Plan X required meticulous coordination, strong leadership, and a deep understanding of the program’s requirements.

Some articles about Plan X:

- DARPA’s Plan X Gives Military Operators a Place to Wage Cyber Warfare

- DARPA’s Quest for a Beneficent Cyber Future

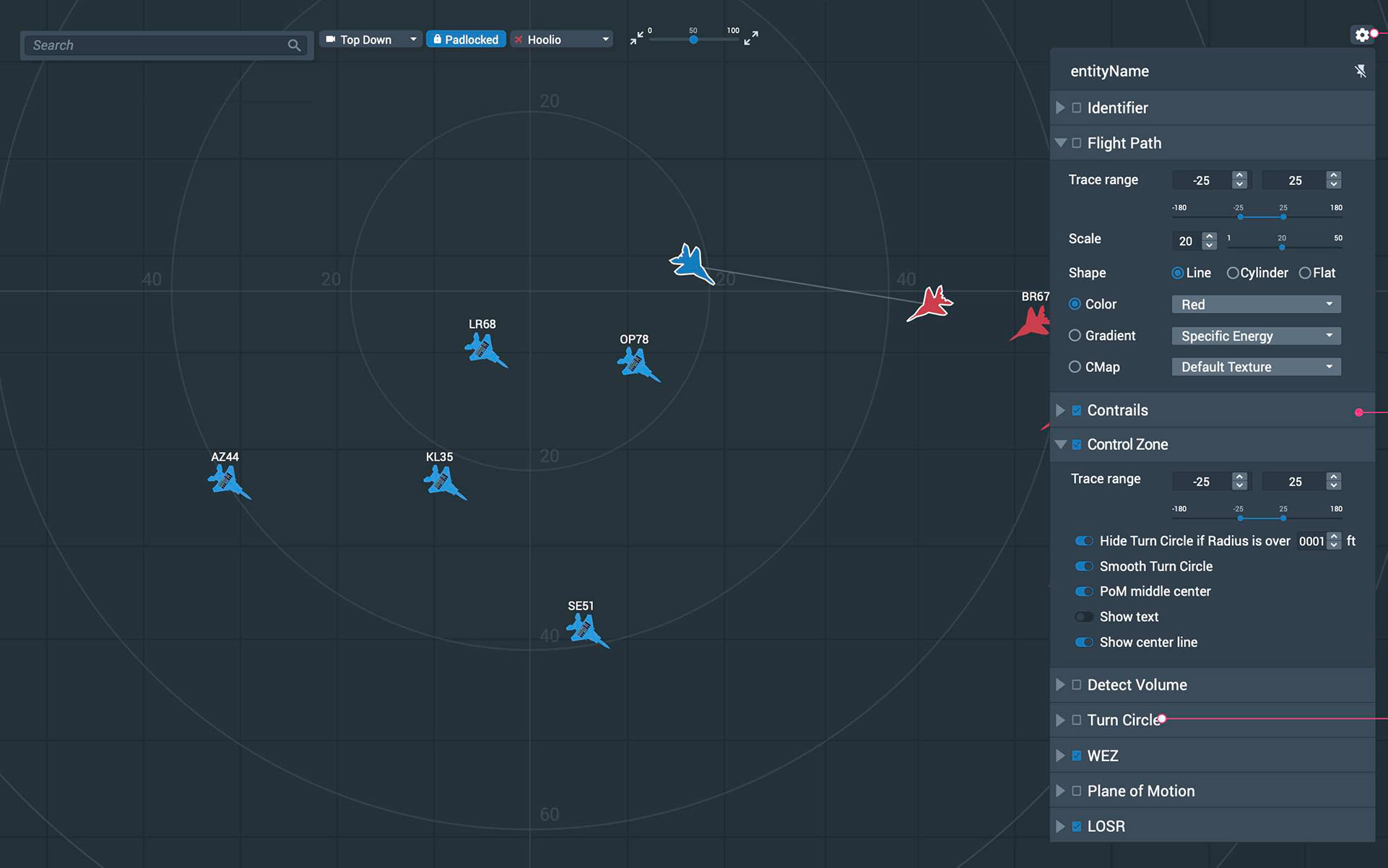

Next-Gen Air Combat Maneuvering Instrumentation System (2016)

Click here to watch a video about Cubic’s ACMI system.

Cubic’s Air Combat Maneuvering Instrumentation (ACMI) system is a cutting-edge technology designed to enhance training and evaluation for military aviation operations. ACMI provides real-time tracking, monitoring, and data collection capabilities during aerial combat training exercises. The system integrates advanced sensors, data links, and ground-based infrastructure to capture and analyze critical flight parameters, including aircraft position, speed, altitude, and weapon systems engagement.

By accurately recording and replaying the simulated combat scenarios, Cubic’s ACMI system enables pilots and mission planners to assess performance, tactics, and decision-making in a highly realistic training environment. The system offers comprehensive after-action review capabilities, allowing users to analyze flight data, debrief missions, and identify areas for improvement. ACMI also supports the integration of multiple platforms, facilitating joint training exercises and enhancing interoperability between different aircraft and units.

Cubic’s ACMI system has been widely adopted by military organizations around the world, providing valuable insights and enhancing the effectiveness of aerial combat training. Its advanced features and robust performance make it a trusted solution for improving operational readiness, pilot proficiency, and mission success.

In 2014, Intific became part of Cubic Corporation and was integrated into Cubic Global Defense Systems. Among Cubic’s many programs, its flagship offering is the Air Combat Maneuvering Instrumentation (ACMI) system. ACMI is a vital tool used by the United States Air Force (USAF) and allied forces to monitor and track aircraft during air combat training exercises such as Red Flag. Think of it as an advanced version of laser tag, on a much larger scale. In fact, Cubic’s original ACMI system appeared in a post-flight debrief scene in the movie “Top Gun.” Cheesy, indeed.

‘If you think, you’re dead.’ – Maverick

My main objective was to modernize the aging second-generation ACMI Individual Combat Aircrew Display Systems (ICADS). This was an exciting opportunity that presented numerous challenges.

To accomplish this, I decided to develop the next-generation ICADS system as a cutting-edge web application, leveraging the latest technologies and drawing from my experience in developing complex user interfaces and visualizations for projects like Plan X. The core UI and application logic were implemented using React and Redux, providing a modern and efficient foundation. WebGL and WebAssembly played a crucial role in delivering high-performance 3D rendering and handling performance-critical “main-loop” code. This approach proved to be remarkably successful in revitalizing the ACMI system.

The next-gen ICADS web interface is capable of rendering multiple complex visualizations at 60 FPS.

The next-gen ICADS system is highly modular to support specialized use cases. Here’s a screenshot of the Range Training Officer (RTO) display.

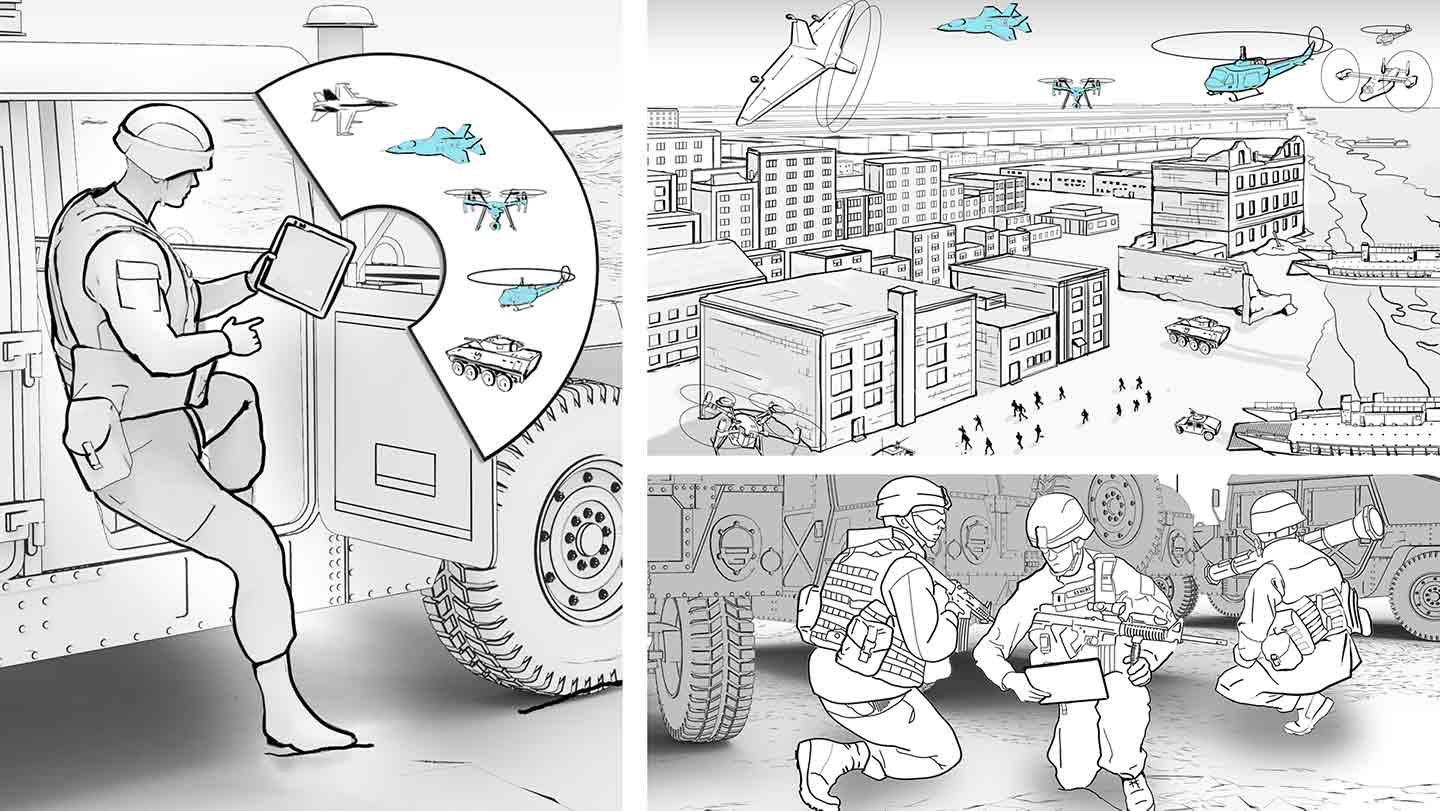

DARPA PROTEUS (2017)

PROTEUS concept art.

DARPA’s PROTEUS (Prototype Resilient Operations Testbed for Expeditionary Urban Scenarios) program is an initiative focused on developing advanced technologies and strategies for conducting military operations in urban environments. Urban areas present unique challenges, including complex terrain, dense populations, and a multitude of potential threats, making them particularly difficult environments for military forces to operate in effectively.

The goal of the PROTEUS program is to enhance the resilience, adaptability, and effectiveness of military operations in urban settings by exploring innovative approaches and technologies. This includes developing new tools, systems, and methodologies that can improve situational awareness, facilitate decision-making, and enable rapid adaptation to changing circumstances.

PROTEUS brings together interdisciplinary teams comprising researchers, engineers, and operational experts to collaborate on developing and testing new concepts and technologies. The program emphasizes the integration of advanced sensing technologies, data analytics, autonomous systems, and human-machine interfaces to create a comprehensive operational testbed for urban operations.

By leveraging cutting-edge technologies and conducting realistic experiments and simulations, PROTEUS aims to advance the understanding of urban warfare dynamics and provide valuable insights for military planners and operators. Ultimately, the program’s outcomes will contribute to the development of more effective strategies and capabilities for expeditionary urban operations, enhancing the military’s ability to operate in complex urban environments while ensuring the safety and success of personnel on the ground.

Some articles about PROTEUS:

- DARPA’s PROTEUS program gamifies the art of war

- DARPA’s PROTEUS completes successful experimentation with USMC

Project IKE (2018)

As the Cyber National Mission Force (CNMF) transitions to a unified command structure, there is a growing need for effective tools to monitor the readiness, status, and activities of its many cyber operators. CNMF leaders also require a comprehensive situational awareness platform that consolidates cyber threat indicators and known compromises and supports the development of strategic plans. Recognizing the potential of the Defense Advanced Research Projects Agency’s Plan X, the Office of the Secretary of Defense (OSD) Strategic Capabilities Office (SCO) initiated Project IKE as a prototype solution to these needs. I played a key role in advocating for Plan X as the preferred command and control (C2) solution for the OSD SCO, U.S. Air Force, and Space Force, and made substantial contributions to the project’s success.

Some articles about Project IKE:

- Air Force takes ownership of DARPA cyber warfare program

- Air Force, Strategic Capabilities Office Aim to Develop Cyber Planning Tool Via ‘Project IKE’

Optios (Present)

Optios stands at the forefront of the burgeoning neuroperformance industry, leveraging over a decade of expertise gained from DARPA projects, substantial proprietary research investments, and strong collaborations with esteemed global organizations. With a clear vision, Optios is committed to constructing an intellectual framework and platform that paves the way for the next stage of human advancement. By harnessing cutting-edge technologies and insights, Optios is dedicated to driving human development to new frontiers.

Please see my resume here.